Enabling AI Self-Improvement at Scale

How Forth Clover Architected Gulp.ai's LLM Fine-Tuning Pipeline with VeRL and SageMaker HyperPod

Client Overview

Gulp.ai is a San Francisco-based AI company that enables AI self-improvement through real-time reinforcement learning. The Y Combinator-backed startup focuses on unlocking AI agent productivity at production scale by providing the missing piece for truly effective AI systems: the ability to learn from experience.

Gulp.ai addresses the critical gap in deploying AI agents that can continuously improve their performance through real-world interactions.

The Challenge

Gulp.ai needed to integrate SGLang as the model inference backend for LLM fine-tuning with VeRL, a reinforcement learning framework designed specifically for fine-tuning large language models. The goal was to improve GPU utilization.

The existing LLM training infrastructure had no efficient way to run model inference during training. As is common in traditional training pipelines, GPUs sat underutilized during inference phases, which created bottlenecks in the training workflow.
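As a rough illustration of what selecting SGLang for inference looks like in practice, the sketch below assembles a VeRL launch command with the rollout backend set to SGLang. It is not Gulp.ai's actual configuration: the helper name `build_verl_command` and all default values are hypothetical, and the override keys reflect VeRL's Hydra-style configuration as commonly documented, so they should be checked against the VeRL version in use.

```python
import shlex


def build_verl_command(rollout_backend="sglang", n_nodes=2,
                       gpus_per_node=8, gpu_memory_utilization=0.6):
    """Assemble a VeRL PPO launch command as a list of arguments.

    Hypothetical helper: the defaults are placeholders, and the override
    keys are assumptions to verify against the installed VeRL version.
    """
    if rollout_backend not in {"sglang", "vllm"}:
        raise ValueError(f"unsupported rollout backend: {rollout_backend}")

    overrides = {
        # Which inference engine generates rollouts during RL fine-tuning.
        "actor_rollout_ref.rollout.name": rollout_backend,
        # Fraction of GPU memory the inference engine may reserve.
        "actor_rollout_ref.rollout.gpu_memory_utilization": gpu_memory_utilization,
        # Cluster shape seen by the trainer.
        "trainer.nnodes": n_nodes,
        "trainer.n_gpus_per_node": gpus_per_node,
    }
    command = ["python3", "-m", "verl.trainer.main_ppo"]
    command += [f"{key}={value}" for key, value in overrides.items()]
    return command


if __name__ == "__main__":
    # Print a shell-safe version of the command for inspection.
    print(shlex.join(build_verl_command()))
```

Keeping the backend as a single override makes it straightforward to compare SGLang against another rollout engine on the same cluster without touching the rest of the job definition.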

Key Requirements:

- Custom Docker image with CUDA, PyTorch, and Python compatibility

- Seamless integration with Amazon SageMaker HyperPod on EKS

- Support for distributed training workloads

- Scalable, predictable, and managed infrastructure solution

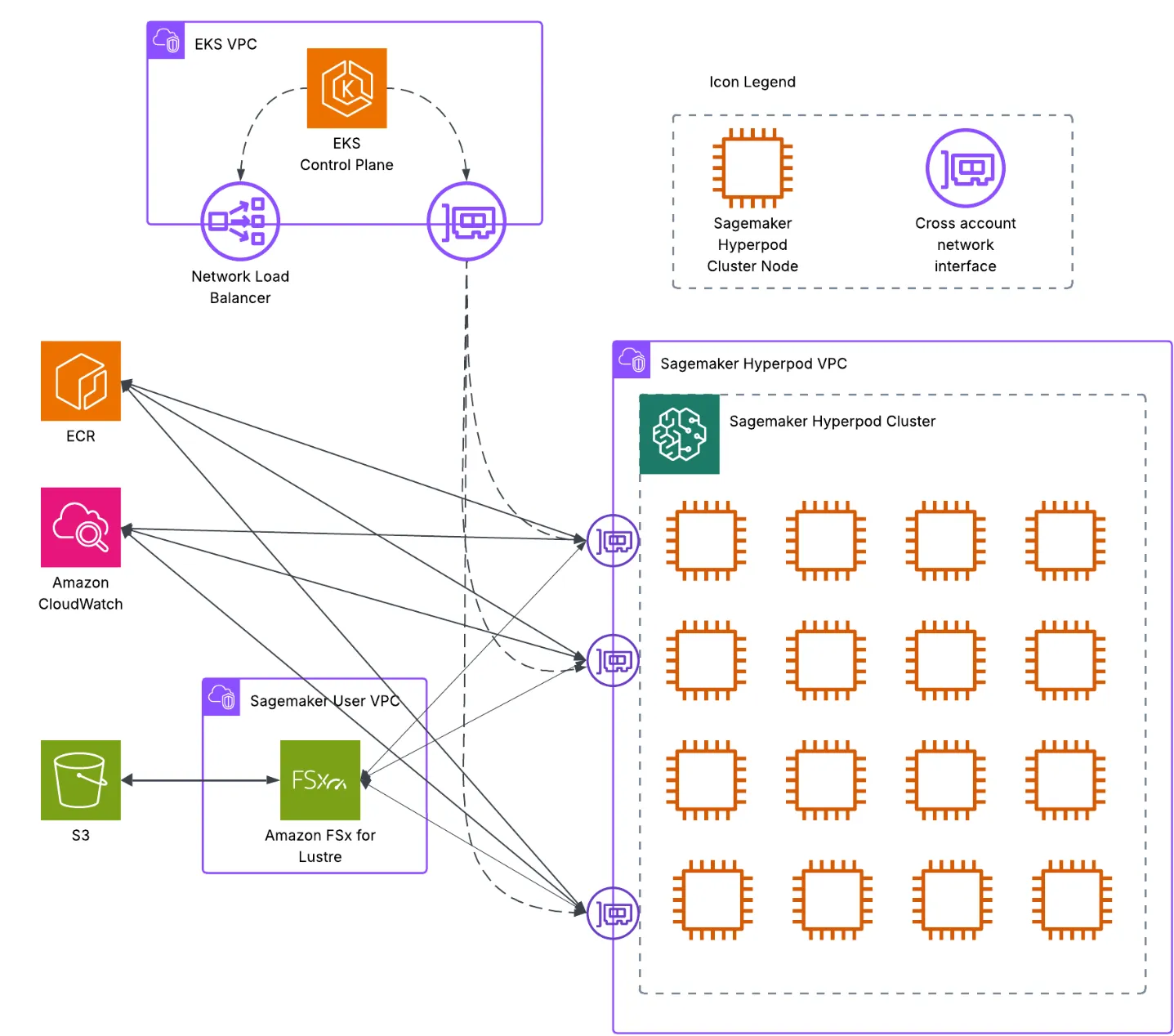

AWS Architecture Solution

Complete AWS architecture for Gulp.ai's LLM fine-tuning pipeline with SageMaker HyperPod

Network Layer

- Amazon VPC for isolated networking environment

- Security Groups for controlled access to EKS and HyperPod

- EFA Support for high-performance distributed training

- VPC Endpoints for secure AWS service access

Compute Layer

- Amazon SageMaker HyperPod for orchestration

- Amazon EKS managed by HyperPod for container orchestration

- Ray Cluster for distributed computing

- High-performance GPU instances optimized for ML workloads

Storage Layer

- Amazon S3 for training data and model artifacts

- Amazon FSx for Lustre for high-performance file system

- Amazon EBS for persistent block storage

- Amazon ECR for container registry

Orchestration Layer

- SageMaker HyperPod for cluster lifecycle management

- KubeRay Operator for Ray cluster management

- Helm Charts for NVIDIA device plugins and EFA drivers

- Kubernetes Jobs for training job execution
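To make the orchestration layer more concrete, here is a minimal sketch of the kind of RayCluster resource the KubeRay Operator manages, built as a Python dictionary and printed as JSON. It is an assumption-laden illustration rather than the manifest used in this project: the function name `ray_cluster_manifest`, the cluster name, the image URI, the group name, and all resource sizes are hypothetical placeholders. The `nvidia.com/gpu` and `vpc.amazonaws.com/efa` resource names are the ones exposed by the NVIDIA and EFA device plugins.

```python
import json


def ray_cluster_manifest(name, image, workers, gpus_per_worker=8, efa_per_worker=1):
    """Build a KubeRay RayCluster manifest as a plain dict.

    Illustrative only: sizes, names, and the image are placeholders.
    """
    def pod_template(container_name, limits):
        # Minimal pod template with a single container and resource limits.
        return {
            "spec": {
                "containers": [{
                    "name": container_name,
                    "image": image,
                    "resources": {"limits": limits},
                }]
            }
        }

    # Worker pods request GPUs and EFA devices from the device plugins.
    worker_limits = {
        "nvidia.com/gpu": gpus_per_worker,
        "vpc.amazonaws.com/efa": efa_per_worker,
    }
    return {
        "apiVersion": "ray.io/v1",
        "kind": "RayCluster",
        "metadata": {"name": name},
        "spec": {
            "headGroupSpec": {
                "rayStartParams": {"dashboard-host": "0.0.0.0"},
                "template": pod_template("ray-head", {"cpu": "4", "memory": "16Gi"}),
            },
            "workerGroupSpecs": [{
                "groupName": "gpu-workers",
                "replicas": workers,
                "minReplicas": workers,
                "maxReplicas": workers,
                "rayStartParams": {},
                "template": pod_template("ray-worker", worker_limits),
            }],
        },
    }


if __name__ == "__main__":
    manifest = ray_cluster_manifest(
        name="verl-ray",                       # hypothetical cluster name
        image="<account>.dkr.ecr.<region>.amazonaws.com/verl-sglang:latest",  # placeholder URI
        workers=2,
    )
    print(json.dumps(manifest, indent=2))
```

The printed JSON can be applied with standard Kubernetes tooling. A training run would then be submitted to the resulting Ray cluster, for example from a Kubernetes Job.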

Benefits & Results

Performance Optimization

- Significantly higher GPU utilization

- Reduced overall training time

- Optimized memory usage

- Faster token generation

- Seamless multi-node scaling

Operational Excellence

- Managed infrastructure with HyperPod

- Containerized deployment

- Infrastructure as Code with Terraform

- Automated resource management

- Fault tolerance across AZs

Cost Efficiency

- Optimized resource usage

- Reduced operational overhead

- Flexible deployment options

- Pay-per-use model

- Predictable cost management

Developer Experience

- Simplified Helm-based deployment

- Comprehensive CloudWatch monitoring

- Flexible configuration options

- Focus on model development

- Reduced infrastructure complexity

Conclusion

The VeRL integration with SageMaker HyperPod transformed Gulp.ai's LLM fine-tuning capabilities, delivering measurable improvements in GPU utilization and training efficiency. By seamlessly integrating SGLang for inference during reinforcement learning fine-tuning, the solution reduced training time while maintaining model quality.

Forth Clover was proud to partner with Gulp.ai to architect this scalable, cost-effective infrastructure that enables them to continuously improve their AI agents through real-world feedback loops at production scale.
